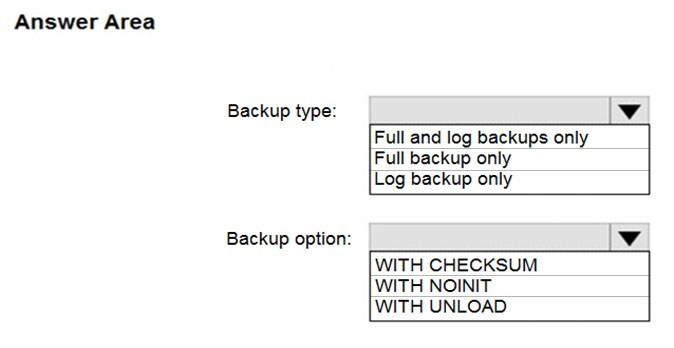

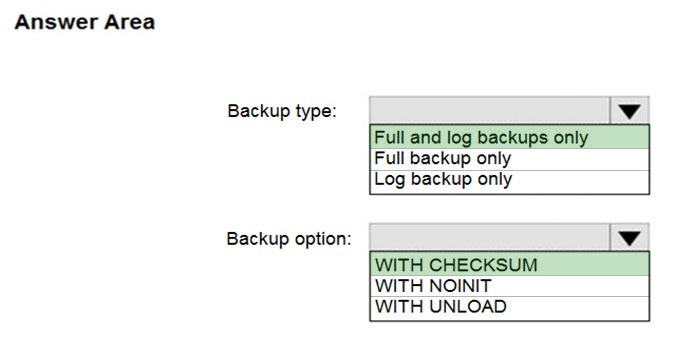

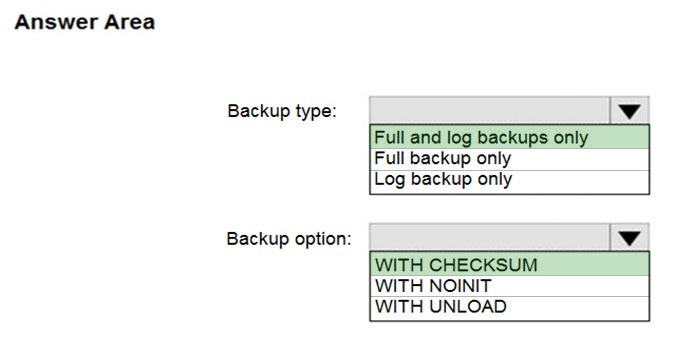

Box 1: Full and log backups only

Take every backup to its own backup file. Azure Database Migration Service doesn't support backups that are appended to a single backup file, so write the full backup and each log backup to separate files.

Box 2: WITH CHECKSUM

Azure Database Migration Service uses the backup and restore method to migrate your on-premises databases to SQL Managed Instance. Azure Database Migration Service only supports backups created using checksum.
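The two requirements above (one file per backup, and backups created with checksum) can be sketched as a small Python helper that builds the T-SQL statements. This is only an illustration: the function name `backup_statement`, the database name, the share path, and the timestamp-based file naming are all assumptions, not something the migration service prescribes.

```python
from datetime import datetime


def backup_statement(database: str, kind: str, folder: str, taken_at: datetime) -> str:
    """Build a T-SQL backup statement that targets its own file WITH CHECKSUM.

    `kind` is "full" or "log". The folder and the file-naming scheme are
    illustrative assumptions.
    """
    if kind == "full":
        verb, ext = "BACKUP DATABASE", "bak"
    elif kind == "log":
        verb, ext = "BACKUP LOG", "trn"
    else:
        raise ValueError(f"unsupported backup kind: {kind!r}")

    # A timestamp in the file name gives each backup a distinct file,
    # so no backup set is appended to an earlier one.
    stamp = taken_at.strftime("%Y%m%d_%H%M%S")
    path = f"{folder}\\{database}_{kind}_{stamp}.{ext}"
    return f"{verb} [{database}] TO DISK = N'{path}' WITH CHECKSUM;"


if __name__ == "__main__":
    # Hypothetical database and share names, for illustration only.
    print(backup_statement("SalesDb", "full", r"\\fileserver\backups", datetime(2024, 1, 1, 2, 0, 0)))
    print(backup_statement("SalesDb", "log", r"\\fileserver\backups", datetime(2024, 1, 1, 2, 15, 0)))
```

Each generated statement names a different file, which matters because NOINIT (append) is the default behavior when a backup targets an existing file.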

Incorrect Answers:

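NOINIT: Indicates that the backup set is appended to the specified media set, preserving existing backup sets. If a media password is defined for the media set, the password must be supplied. NOINIT is the default. It does not enable checksums, and appending backups to a single file is exactly what Azure Database Migration Service doesn't support.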

UNLOAD: Specifies that the tape is automatically rewound and unloaded when the backup is finished. UNLOAD is the default when a session begins.

Reference:

https://docs.microsoft.com/en-us/azure/dms/known-issues-azure-sql-db-managed-instance-online