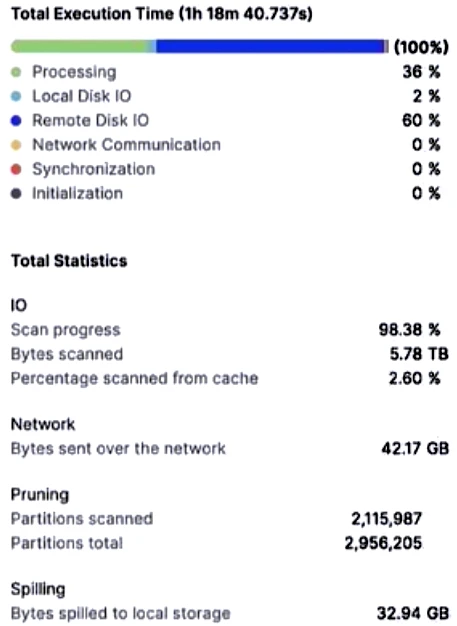

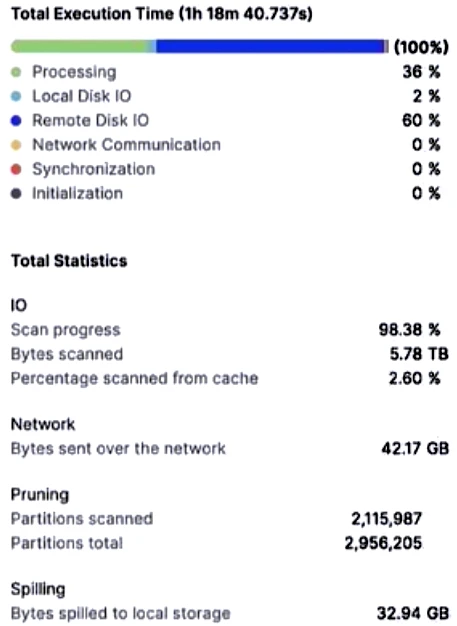

Using a size 2XL virtual warehouse, this query took over an hour to complete.

What will improve the query performance the MOST?

Adding a date column as a clustering key on the table would improve the query performance the most. The Query Profile shows a very high number of partitions scanned, so the bottleneck is the amount of data read, not the available compute. Clustering co-locates rows with similar key values in the same micro-partitions. When the query filters on specific dates, Snowflake can then prune (skip) every micro-partition whose date range cannot match the filter. Increasing the warehouse size adds compute, but the query still scans the same partitions. Adding clusters to a multi-cluster warehouse improves concurrency across many queries, not the speed of a single query. Neither option addresses the excessive partition scanning.
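A toy Python model can show why clustering reduces the number of partitions scanned. This is a simplification, not Snowflake's implementation. The table, the 500-row partition size, and the dates are made up. Each partition records only the min/max of the date column, which is the kind of metadata that pruning relies on.

```python
import random
from datetime import date, timedelta
from typing import List, Tuple

# Toy model (assumption, not Snowflake internals): each "micro-partition"
# is summarized by the (min, max) of its date column.
Partition = Tuple[date, date]


def build_partitions(dates: List[date], rows_per_partition: int) -> List[Partition]:
    """Split rows into consecutive partitions and record each one's min/max date."""
    parts: List[Partition] = []
    for i in range(0, len(dates), rows_per_partition):
        chunk = dates[i:i + rows_per_partition]
        parts.append((min(chunk), max(chunk)))
    return parts


def partitions_scanned(parts: List[Partition], target: date) -> int:
    """Count partitions that pruning cannot skip for WHERE date_col = target."""
    return sum(1 for lo, hi in parts if lo <= target <= hi)


# 365 days x 100 rows per day (hypothetical data).
start = date(2024, 1, 1)
rows = [start + timedelta(days=d) for d in range(365) for _ in range(100)]

# Rows loaded in random order: every partition spans most of the year.
shuffled = rows[:]
random.Random(0).shuffle(shuffled)
unclustered = build_partitions(shuffled, rows_per_partition=500)

# Rows ordered by the clustering key: each partition spans only a few days.
clustered = build_partitions(sorted(shuffled), rows_per_partition=500)

target = date(2024, 6, 15)
print("unclustered:", partitions_scanned(unclustered, target), "of", len(unclustered))
print("clustered:  ", partitions_scanned(clustered, target), "of", len(clustered))
```

In Snowflake itself, the corresponding step is defining the key with ALTER TABLE ... CLUSTER BY (...) on the date column and letting Automatic Clustering maintain it.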

For a Snowflake account hosted on AWS eu-west-1 (Ireland), an internal stage can exist only in the account's own region. Internal stages are stored in the cloud storage that Snowflake manages for that account. An internal stage in another region, such as AWS eu-central-1 (Frankfurt), or on another cloud provider, such as GCP, is therefore not a valid source. External stages have no such restriction. They can point to cloud storage in a different region or even on a different cloud provider. Hence, 'Internal stage on AWS eu-central-1 (Frankfurt)' is invalid. 'External stage in an Amazon S3 bucket on AWS eu-west-1 (Ireland)' and 'External stage in an Amazon S3 bucket on AWS eu-central-1 (Frankfurt)' are valid, as is an external stage on another cloud provider. Option 'F' is invalid because Snowflake cannot load data directly from an SSD attached to an Amazon EC2 instance. The files must first be uploaded to a stage.
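The stage-location rule can be written as a small decision function. This is a hypothetical sketch: LoadSource, is_valid_load_source, and the "kind" labels are illustrative names, not part of any Snowflake API. The example regions are placeholders.

```python
from dataclasses import dataclass

# Cloud storage an external stage can reference: Amazon S3 ("aws"),
# Google Cloud Storage ("gcp"), and Microsoft Azure ("azure").
EXTERNAL_STAGE_CLOUDS = {"aws", "gcp", "azure"}


@dataclass(frozen=True)
class LoadSource:
    kind: str    # illustrative labels: "internal_stage", "external_stage", "local_disk"
    cloud: str   # e.g. "aws", "gcp", "azure"
    region: str  # e.g. "eu-west-1"


def is_valid_load_source(src: LoadSource, account_cloud: str, account_region: str) -> bool:
    """Apply the stage-location rules to one candidate source."""
    if src.kind == "internal_stage":
        # Internal stages live in the account's own storage, so only the
        # account's cloud and region are possible.
        return src.cloud == account_cloud and src.region == account_region
    if src.kind == "external_stage":
        # External stages may point at supported cloud storage in any region.
        return src.cloud in EXTERNAL_STAGE_CLOUDS
    # Anything else (such as an SSD on an EC2 instance) is not a stage;
    # its files must be uploaded to a stage before they can be loaded.
    return False


# Usage: the account from the question is on AWS eu-west-1 (Ireland).
candidates = {
    "Internal stage, AWS eu-central-1": LoadSource("internal_stage", "aws", "eu-central-1"),
    "External stage, S3 eu-west-1": LoadSource("external_stage", "aws", "eu-west-1"),
    "External stage, S3 eu-central-1": LoadSource("external_stage", "aws", "eu-central-1"),
    "SSD on an EC2 instance": LoadSource("local_disk", "aws", "eu-west-1"),
}
for label, src in candidates.items():
    print(f"{label}: {is_valid_load_source(src, 'aws', 'eu-west-1')}")
```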

To disable Fail-safe for all tables, create a transient database. Tables in a transient database are transient, so they have no Fail-safe period. Permanent tables have a 7-day Fail-safe period, and transient tables are limited to 0 or 1 day of Time Travel. Therefore, creating a transient database as a clone of the production database meets the requirement to disable Fail-safe for all tables.
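A short worked model makes the retention difference concrete. The statement that meets the requirement has the form CREATE TRANSIENT DATABASE <new_db> CLONE <production_db>; (placeholder names). The Python below only tabulates the documented Time Travel and Fail-safe limits per table type. protection_window is a hypothetical helper, not a Snowflake API.

```python
from typing import Tuple

# Fail-safe days and maximum Time Travel days per table type.
# The 90-day Time Travel maximum for permanent tables assumes Enterprise
# Edition or higher; Standard Edition allows at most 1 day.
FAIL_SAFE_DAYS = {"permanent": 7, "transient": 0, "temporary": 0}
MAX_TIME_TRAVEL_DAYS = {"permanent": 90, "transient": 1, "temporary": 1}


def protection_window(table_type: str, time_travel_days: int) -> Tuple[int, int]:
    """Return (time_travel_days, fail_safe_days) for a table of the given type."""
    max_tt = MAX_TIME_TRAVEL_DAYS[table_type]
    if not 0 <= time_travel_days <= max_tt:
        raise ValueError(f"{table_type} tables allow 0-{max_tt} days of Time Travel")
    return time_travel_days, FAIL_SAFE_DAYS[table_type]


# A table in the production (permanent) database vs. its transient clone:
print(protection_window("permanent", 1))  # Fail-safe still applies
print(protection_window("transient", 1))  # no Fail-safe
```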

The goal is to retrieve the 20 most recent executions of the task MYTASK that were scheduled within the last hour and have either completed or are still running. To do this, the query must exclude runs that have not started yet. Filtering on QUERY_ID IS NOT NULL achieves this, because QUERY_ID is populated only when the task starts running. Without this filter, the results could include runs that are scheduled but have not yet started. The query must also limit the results to the 20 most recent entries.
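The same filtering logic can be imitated in plain Python over an in-memory list of history rows. This is only a sketch. TaskRun and recent_started_runs are hypothetical names. In Snowflake, the equivalent work is done by querying the INFORMATION_SCHEMA.TASK_HISTORY table function, for example with its SCHEDULED_TIME_RANGE_START, RESULT_LIMIT, and TASK_NAME arguments, and adding WHERE QUERY_ID IS NOT NULL.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class TaskRun:
    name: str
    scheduled_time: datetime
    query_id: Optional[str]  # None while the run is scheduled but not started
    state: str               # e.g. "SCHEDULED", "EXECUTING", "SUCCEEDED"


def recent_started_runs(history: List[TaskRun], task_name: str,
                        now: datetime, limit: int = 20) -> List[TaskRun]:
    """Most recent runs of task_name scheduled in the last hour that have started."""
    cutoff = now - timedelta(hours=1)
    started = [
        r for r in history
        if r.name == task_name
        and r.scheduled_time >= cutoff   # scheduled within the last hour
        and r.query_id is not None       # excludes runs that have not started
    ]
    # Newest first, then keep only the requested number of rows.
    started.sort(key=lambda r: r.scheduled_time, reverse=True)
    return started[:limit]
```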

Snowpark provides several methods for creating a DataFrame. session.read.json() creates a DataFrame from JSON files in a stage. session.table() creates a DataFrame from an existing table in Snowflake. session.sql() creates a DataFrame from the results of a SQL query. The other methods listed relate to the session itself or to writing data, and they do not create a DataFrame.
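A minimal sketch of the three methods follows. It assumes an existing snowflake.snowpark Session object. The table name, query text, and stage path are hypothetical placeholders. The session is typed loosely, so the snippet imports nothing from Snowpark and can be exercised with a stand-in object.

```python
from typing import Any, Dict


def create_example_dataframes(session: Any) -> Dict[str, Any]:
    """Create one DataFrame with each of the three methods.

    `session` is assumed to be a snowflake.snowpark.Session. Rows are
    fetched only when an action such as .collect() or .show() is called.
    """
    return {
        # 1. From an existing table (hypothetical fully qualified name).
        "from_table": session.table("MY_DB.MY_SCHEMA.ORDERS"),
        # 2. From the result of a SQL query.
        "from_sql": session.sql("SELECT CURRENT_DATE() AS today"),
        # 3. From JSON files in a stage (hypothetical stage path).
        "from_json": session.read.json("@my_stage/orders.json"),
    }
```

With a real session, create_example_dataframes(session)["from_sql"].collect() would run the query and return its rows.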