After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a data warehouse that stores information about products, sales, and orders for a manufacturing company. The instance contains a database that has two tables named SalesOrderHeader and SalesOrderDetail. SalesOrderHeader has 500,000 rows and SalesOrderDetail has 3,000,000 rows.

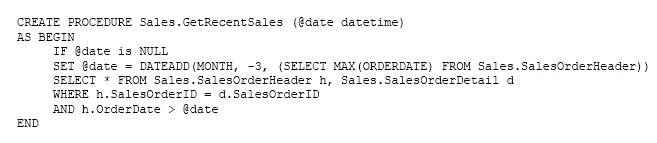

Users report performance degradation when they run the following stored procedure:

You need to optimize performance.

Solution: You run the following Transact-SQL statement:

Does the solution meet the goal?