Your weather app queries a database every 15 minutes to get the current temperature. The frontend is powered by Google App Engine and serves millions of users. How should you design the frontend to respond to a database failure?

When the frontend fails to retrieve data from the database, it should retry the query with exponential backoff. The frontend retries the failed query at increasing intervals, starting with a short delay and doubling it after each failure, up to a maximum of 15 minutes, which matches the app's normal query interval. Spacing the retries this way keeps millions of frontend instances from flooding the database with constant requests while it is trying to recover. Exponential backoff balances timely data retrieval against server load and user impact, which makes it the most effective strategy for handling temporary database outages.
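The retry schedule described above can be sketched as follows. This is a minimal illustration, not App Engine or database client code: `fetch_with_backoff`, the `query` callable, the 1-second starting delay, the attempt limit, and the use of `ConnectionError` to signal a database failure are all assumptions made for the example. Only the doubling and the 15-minute cap come from the answer.

```python
import time


def fetch_with_backoff(query, base_delay=1.0, max_delay=900.0,
                       max_attempts=8, sleep=time.sleep):
    """Call query() until it succeeds, doubling the wait after each failure.

    query        -- hypothetical zero-argument callable that reads the database
    base_delay   -- first wait in seconds (assumed value)
    max_delay    -- cap on any single wait; 900 s is the 15-minute maximum
    max_attempts -- total tries before giving up (assumed value)
    sleep        -- injectable so the schedule can be inspected without waiting
    """
    delay = base_delay
    for attempt in range(1, max_attempts + 1):
        try:
            return query()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller handle the failure
            sleep(min(delay, max_delay))  # never wait longer than the cap
            delay *= 2  # double the interval for the next retry
```

In production, retry logic like this is often combined with random jitter so that many clients do not retry at the same instant; that refinement is omitted here to keep the schedule easy to read.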

You are creating a model to predict housing prices. Due to budget constraints, you must run it on a single resource-constrained virtual machine. Which learning algorithm should you use?

When predicting housing prices, a regression model should be employed since the goal is to predict a continuous value. Linear regression is an appropriate choice for this situation, as it is simple, computationally efficient, and can run on a single resource-constrained virtual machine. It does not require the extensive computational power or specialized hardware needed by more complex algorithms like neural networks. Logistic classification, on the other hand, is used for binary classification tasks and is not suitable for predicting continuous values. Therefore, linear regression is the best fit given the constraints and the nature of the problem.
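To show how little compute linear regression needs, here is a minimal sketch of ordinary least squares with a single feature, written in plain Python with no external libraries. The function names, the choice of floor area as the feature, and the sample figures are assumptions for illustration; a real housing model would use several features and most likely a library implementation.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Return the predicted continuous value for input x."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: floor area in square meters and sale price.
areas = [50, 100, 150]
prices = [150000, 250000, 350000]
model = fit_line(areas, prices)
estimate = predict(model, 120)
```

Training is a single pass over the data with a handful of sums, which is why this kind of model fits comfortably on one small virtual machine.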

You are building a new real-time data warehouse for your company and will use Google BigQuery streaming inserts. There is no guarantee that data will be sent only once, but you do have a unique ID for each row of data and an event timestamp. You want to ensure that duplicates are not included while interactively querying data. Which query type should you use?

Use the ROW_NUMBER window function with PARTITION BY on the unique ID, then filter with WHERE on the row number equal to 1. Within each partition of rows that share the same unique ID, ROW_NUMBER assigns each row an incremental number based on the specified order, typically the event timestamp. Keeping only the rows numbered 1 removes the duplicates and retains exactly one row per unique ID for interactive queries.
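The query pattern can be tried locally with a small sketch. It runs the ROW_NUMBER query against an in-memory SQLite database from Python's standard library as a stand-in for BigQuery, so it assumes a SQLite build with window-function support (3.25 or later). The table name `events`, the column names, and the choice of `ORDER BY event_ts DESC` (keep the latest copy) are assumptions for illustration.

```python
import sqlite3

# Number the rows within each unique_id, then keep only row number 1.
DEDUP_QUERY = """
SELECT unique_id, event_ts, payload
FROM (
  SELECT *,
         ROW_NUMBER() OVER (
           PARTITION BY unique_id
           ORDER BY event_ts DESC
         ) AS row_num
  FROM events
)
WHERE row_num = 1
ORDER BY unique_id
"""


def dedup_rows(rows):
    """Load (unique_id, event_ts, payload) tuples and return one row per ID."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE events (unique_id TEXT, event_ts TEXT, payload TEXT)"
    )
    conn.executemany("INSERT INTO events VALUES (?, ?, ?)", rows)
    result = conn.execute(DEDUP_QUERY).fetchall()
    conn.close()
    return result


# Hypothetical stream in which row "a" was delivered twice.
sample = [
    ("a", "2024-01-01T00:00:00", "x"),
    ("a", "2024-01-01T00:00:00", "x"),
    ("b", "2024-01-01T00:05:00", "y"),
]
unique_rows = dedup_rows(sample)
```

The duplicates stay in the underlying table; the query only hides them at read time, which suits interactive querying over streamed data.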

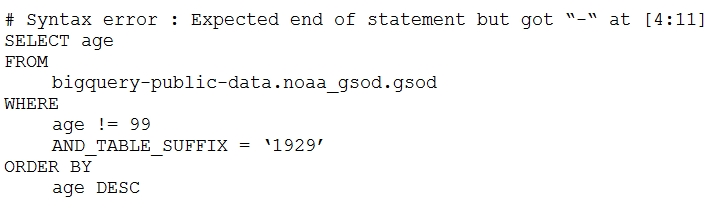

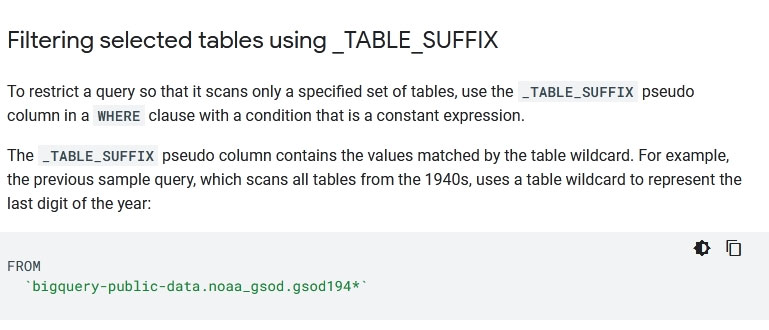

Your company is using WILDCARD tables to query data across multiple tables with similar names. The SQL statement is currently failing with the following error:

Which table name will make the SQL statement work correctly?

D

Reference:

https://cloud.google.com/bigquery/docs/wildcard-tables

Your company is in a highly regulated industry. One of your requirements is to ensure individual users have access only to the minimum amount of information required to do their jobs. You want to enforce this requirement with Google BigQuery. Which three approaches can you take? (Choose three.)

Three approaches enforce least-privilege access in Google BigQuery. First, restrict access to tables by role: use BigQuery access controls to assign permissions based on user roles, so that users can reach only the data their tasks require. Second, restrict BigQuery API access to approved users: use Cloud Identity and Access Management (IAM) to control who can call the API, which limits data access to authorized personnel. Third, segregate data across multiple tables so that different types of data are kept apart, which allows more granular access control over what each user can see. The other options (disabling writes to tables, encrypting data, and using audit logging) do not directly enforce the principle of least privilege.