HOTSPOT -

You have a self-hosted integration runtime in Azure Data Factory.

The integration runtime currently reports the following status:

✑ Status: Running

✑ Type: Self-Hosted

✑ Version: 4.4.7292.1

✑ Running / Registered Node(s): 1/1

✑ High Availability Enabled: False

✑ Linked Count: 0

✑ Queue Length: 0

✑ Average Queue Duration: 0.00s

The integration runtime has the following node details:

✑ Name: X-M

✑ Status: Running

✑ Version: 4.4.7292.1

✑ Available Memory: 7697MB

✑ CPU Utilization: 6%

✑ Network (In/Out): 1.21KBps/0.83KBps

✑ Concurrent Jobs (Running/Limit): 2/14

✑ Role: Dispatcher/Worker

✑ Credential Status: In Sync
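
To read the figures above at a glance, the following minimal sketch derives a few values from them. It only does arithmetic on the numbers listed in the question and calls no Azure API. The dictionary keys and the `summarize` helper are hypothetical names chosen for illustration.

```python
# Values copied from the status and node details listed in the question.
# All key names are hypothetical; they are not Azure Data Factory property names.
runtime = {
    "running_nodes": 1,
    "registered_nodes": 1,
    "high_availability": False,
    "queue_length": 0,
}
node = {
    "name": "X-M",
    "available_memory_mb": 7697,
    "cpu_utilization_pct": 6,
    "jobs_running": 2,
    "jobs_limit": 14,
}


def summarize(runtime, node):
    """Derive simple headroom figures from the reported status."""
    # Concurrent job slots still unused on the node.
    free_slots = node["jobs_limit"] - node["jobs_running"]
    # Share of the concurrent job limit currently in use, in percent.
    slot_use_pct = round(100 * node["jobs_running"] / node["jobs_limit"], 1)
    # True when only one node is registered and high availability is off.
    single_node_no_ha = (
        runtime["registered_nodes"] == 1 and not runtime["high_availability"]
    )
    return {
        "free_slots": free_slots,
        "slot_use_pct": slot_use_pct,
        "single_node_no_ha": single_node_no_ha,
    }


summary = summarize(runtime, node)
print(summary)
```

The sketch restates what the listing already shows: 2 of 14 concurrent job slots are in use, and X-M is the only registered node with high availability disabled.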

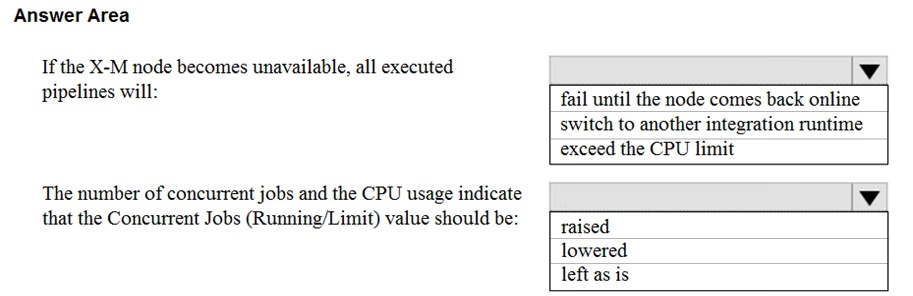

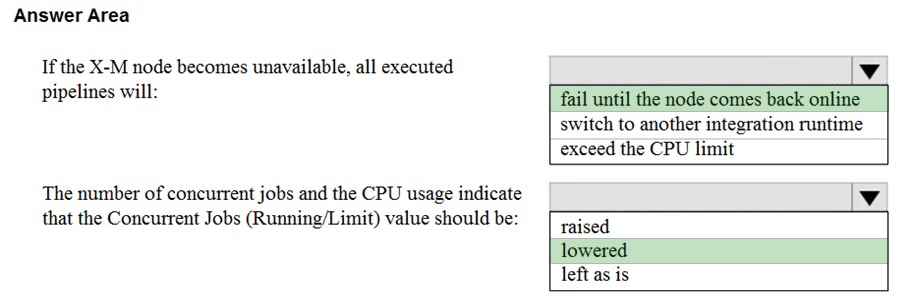

Use the drop-down menus to select the answer choice that completes each statement based on the information presented.

NOTE: Each correct selection is worth one point.

Hot Area: