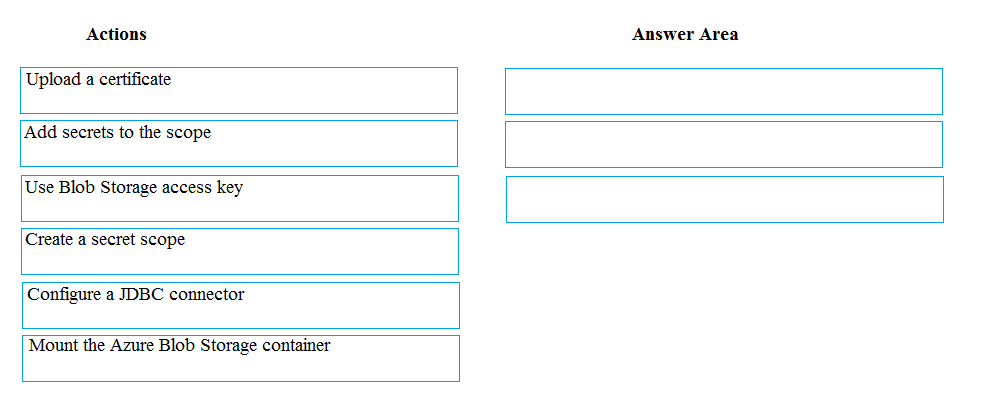

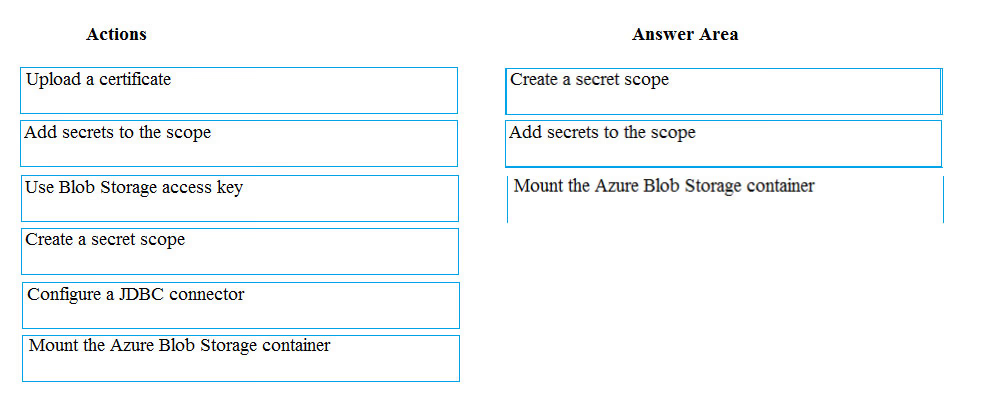

Step 1: Create a secret scope

Step 2: Add secrets to the scope

Note: `dbutils.secrets.get(scope = "<scope-name>", key = "<key-name>")` retrieves the key that has been stored as a secret in the named secret scope.
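As a minimal sketch of how the secret lookup might be wrapped, the helper below calls `dbutils.secrets.get` and fails early if the value comes back empty. The function name `get_storage_key` is hypothetical, and the `dbutils` handle is passed in explicitly (in a Databricks notebook it is already available as a global) so the helper can also be exercised outside a notebook.

```python
def get_storage_key(dbutils, scope, key):
    """Read a secret via dbutils.secrets.get and reject empty values.

    `dbutils` is the Databricks utilities handle; it is a parameter here
    (an assumption of this sketch) so the function can be tested with a stub.
    """
    value = dbutils.secrets.get(scope=scope, key=key)
    if not value:
        # An empty secret would only surface later as an opaque mount failure.
        raise ValueError(f"Secret {key!r} in scope {scope!r} is empty")
    return value
```

In a notebook this could be called as `get_storage_key(dbutils, "<scope-name>", "<key-name>")`, using the scope and key created in Steps 1 and 2.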

Step 3: Mount the Azure Blob Storage container

You can mount a Blob Storage container, or a folder inside a container, through the Databricks File System (DBFS). The mount is a pointer to the Blob Storage container, so the data is never synced locally.

Note: To mount a Blob Storage container or a folder inside a container, use the following command:

Python:

    dbutils.fs.mount(
      source = "wasbs://<container-name>@<storage-account-name>.blob.core.windows.net",
      mount_point = "/mnt/<mount-name>",
      extra_configs = {"<conf-key>": dbutils.secrets.get(scope = "<scope-name>", key = "<key-name>")})

where `dbutils.secrets.get(scope = "<scope-name>", key = "<key-name>")` gets the key that has been stored as a secret in a secret scope.
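The mount command can be wrapped so that re-running a notebook does not fail on an existing mount. The following is a sketch under stated assumptions, not a definitive implementation: the helper names (`wasbs_source`, `account_key_conf`, `mount_container`) are hypothetical, the configuration key is assumed to be the storage-account access-key form `fs.azure.account.key.<storage-account-name>.blob.core.windows.net`, and the `dbutils` handle is passed in as a parameter.

```python
def wasbs_source(container, account):
    """Build the wasbs:// URI for a container in a storage account."""
    return f"wasbs://{container}@{account}.blob.core.windows.net"


def account_key_conf(account):
    """Config key for access-key authentication (assumed auth method)."""
    return f"fs.azure.account.key.{account}.blob.core.windows.net"


def mount_container(dbutils, container, account, mount_name, scope, key):
    """Mount the container under /mnt/<mount_name> unless already mounted."""
    mount_point = f"/mnt/{mount_name}"
    # dbutils.fs.mounts() lists current mounts; each entry has a mountPoint.
    if any(m.mountPoint == mount_point for m in dbutils.fs.mounts()):
        return mount_point
    dbutils.fs.mount(
        source=wasbs_source(container, account),
        mount_point=mount_point,
        # The secret value is read at call time and never written into the notebook.
        extra_configs={
            account_key_conf(account): dbutils.secrets.get(scope=scope, key=key)
        },
    )
    return mount_point
```

Once mounted, files in the container can be addressed through the returned path, for example with `dbutils.fs.ls(mount_point)`, while the data itself stays in Blob Storage.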

References:

https://docs.databricks.com/spark/latest/data-sources/azure/azure-storage.html