Case study -

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study -

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Overview -

XYZ is an online training provider. It also runs a year-long gaming competition for its students, with an event held every month in a different location.

Current Environment -

The company currently has the following environment in place:

* The racing cars for the competition send their telemetry data to a MongoDB database. The telemetry data has around 100 attributes.

* A custom application is then used to transfer the data from the MongoDB database to a SQL Server 2017 database. The attribute names are changed when they are sent to the SQL Server database.

* Another application, named "XYZ workflow", is then used to analyze the telemetry data and identify improvements to the racing cars.

* The SQL Server 2017 database has a table named "cardata" that contains around 1 TB of data. "XYZ workflow" performs the required analytics on the data in this table. Large aggregations are performed on a column of the table.

Proposed Environment -

The company now wants to move the environment to Azure. Below are the key requirements:

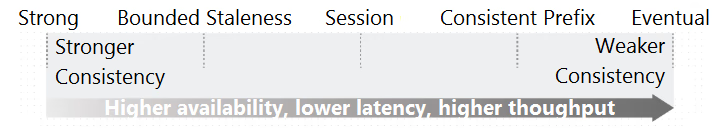

* The racing car data will now be moved to Azure Cosmos DB and Azure SQL Database. The data must be written to the closest Azure data center and must converge in the least amount of time.

* Query performance for data in Azure SQL Database must be stable without requiring administrative overhead.

* The data for analytics will be moved to Azure SQL Data Warehouse.

* Transparent data encryption (TDE) must be enabled for all data stores wherever possible.

* An Azure Data Factory pipeline will be used to move data from the Cosmos DB database to Azure SQL Database. If the data transfer is delayed by more than 15 minutes, the configuration of the pipeline workflow must be changed.

* The telemetry data must be monitored for performance issues.

* The Request Units (RUs) for Cosmos DB must be adjusted to meet demand while minimizing costs.

* The data in Azure SQL Database must be protected as follows:

- Only the last four digits of each value in the CarID column may be shown.

- A value of zero must be shown for every value in the CarWeight column.
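In Azure SQL Database, rules like these are typically configured with dynamic data masking, using the `partial()` masking function for CarID and the `default()` masking function (which returns zero for numeric columns) for CarWeight. The Python sketch below only emulates what a non-privileged user would see under those two rules. It assumes CarID is stored as a string and uses a four-character `XXXX` padding. The function names and the choice to fully mask values of four characters or fewer are hypothetical illustrations, not the service's behavior.

```python
# Hypothetical emulation of the two masking requirements, for illustration only.
# In Azure SQL Database these rules would normally be defined as dynamic data
# masks on the columns, not implemented in application code.

def mask_car_id(car_id: str, visible: int = 4, padding: str = "XXXX") -> str:
    """Show only the last `visible` characters of CarID, prefixed by `padding`.

    Assumption: values no longer than `visible` are fully masked so that
    nothing extra is exposed; the real service may handle short values differently.
    """
    if len(car_id) <= visible:
        return padding
    return padding + car_id[-visible:]


def mask_car_weight(_weight: float) -> float:
    """Always return zero, mirroring a default mask on a numeric column."""
    return 0.0


if __name__ == "__main__":
    print(mask_car_id("CAR123456789"))  # XXXX6789
    print(mask_car_weight(812.5))       # 0.0
```

Keeping the padding a fixed length, rather than one mask character per hidden digit, avoids revealing how long the original CarID was.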

Which of the following consistency levels would you use for the Azure Cosmos DB database?