You need to resolve the capacity issue.

What should you do?

D

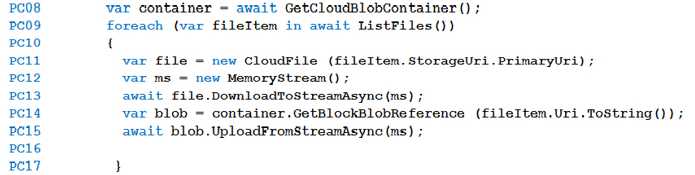

If you want to read the files in parallel, you cannot use forEach. Each async callback call returns a promise, but forEach discards those return values. Use map instead to collect the promises into an array, then await them all with Promise.all.
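
A minimal sketch of that difference, not the exam's code: `processFile` is a hypothetical stand-in that simulates file I/O with a timer, and the two wrapper function names are also made up for illustration. The sequential version awaits each file before starting the next; the parallel version starts every call through map and awaits the resulting array with Promise.all.

```javascript
// Hypothetical stand-in for reading or copying one file; the timer simulates I/O.
function processFile(name, delayMs = 50) {
  return new Promise((resolve) => {
    setTimeout(() => resolve(`done:${name}`), delayMs);
  });
}

// Sequential: each await completes before the next file is started.
async function processSequentially(names) {
  const results = [];
  for (const name of names) {
    results.push(await processFile(name));
  }
  return results;
}

// Parallel: map starts every call immediately and collects the promises;
// Promise.all resolves when all of them have resolved, keeping input order.
async function processInParallel(names) {
  return Promise.all(names.map((name) => processFile(name)));
}
```

With three files and a 50 ms simulated delay, the sequential version takes roughly three delays while the parallel version takes roughly one, which is the effect the explanation is after.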

Scenario: Capacity issue: During busy periods, employees report long delays between the time they upload the receipt and when it appears in the web application.

References:

https://stackoverflow.com/questions/37576685/using-async-await-with-a-foreach-loop

Scaling brings nothing in this case. The function is called once every 5 minutes and gets a huge number of files to process. It's possible to convert the loop to process the files in parallel, or to convert the function so that it is triggered by the files themselves. I think both B and D can be an answer.

A is the answer. You can scale out and up with a dedicated App Service plan. "You need more CPU or memory options than what is provided on the Consumption plan." http://twocents.nl/?p=2078
D is not the answer for this reason: "... will depend on the number of cores the machine has, and you can't control that when you're deployed to the consumption plan." https://github.com/Azure/Azure-Functions/issues/815
C is also not the answer because auto-scaling is the default for Consumption plans. You don't need to configure anything, so it is already on in this scenario: https://docs.microsoft.com/en-us/azure/azure-functions/functions-scale#consumption-plan
B is also not the answer, as there is no file share trigger for Azure Functions. https://stackoverflow.com/questions/50872265/is-there-any-trigger-for-azure-file-share-in-azure-functions-or-azure-logic-app

It doesn't look like there are Azure file share triggers; there are triggers for Blob storage: https://docs.microsoft.com/en-us/azure/azure-functions/functions-triggers-bindings https://stackoverflow.com/questions/50872265/is-there-any-trigger-for-azure-file-share-in-azure-functions-or-azure-logic-app Also, if an Azure file share trigger were supported and many files got dropped at once, it would trigger many instances of the function, which could run into constraints. It seems it would be easier to run the function once every x minutes and process what's there in parallel. Also, this issue happens "During busy periods", so in non-busy periods the user is fine waiting the up to 5 minutes that it could take for the timer to trigger and process the file.

Given answer D is Correct

The correct answer is D. A is incorrect. TimerTrigger does not get faster when you change the plan.

OK, so the correct one is D:
- A (incorrect): TimerTrigger does not get faster when you change the plan.
- C (incorrect): This is the current situation, so the same reason as for A applies.
- B (incorrect): There is no file share trigger for Azure Functions.

How is this a solution? The Stack Overflow link and the promise library are JavaScript solutions.

The given C# code is also async ... I think in real life switching to a dedicated app service plan with always on would be best

The C# version:
1. https://docs.microsoft.com/en-us/dotnet/standard/parallel-programming/how-to-write-a-simple-parallel-foreach-loop
2. https://stackoverflow.com/questions/15136542/parallel-foreach-with-asynchronous-lambda

C should be the correct solution since it allows scaling

Can be rewritten to something like:

    public static async Task Run([TimerTrigger("0 */5 * * * *")] TimerInfo timer, ILogger log)
    {
        var container = await GetCloudBlobContainer();
        // Copies one file from the file share into the blob container.
        async Task ProcessFile(ListFileItem fileItem)
        {
            var file = new CloudFile(fileItem.StorageUri.PrimaryUri);
            var ms = new MemoryStream();
            await file.DownloadToStreamAsync(ms);
            ms.Position = 0; // rewind so the upload reads from the start
            var blob = container.GetBlockBlobReference(fileItem.Uri.ToString());
            await blob.UploadFromStreamAsync(ms);
        }
        var fileItems = await ListFiles();
        // Start every copy, then wait until all of them have finished.
        await Task.WhenAll(fileItems.Select(fi => ProcessFile(fi)));
    }

The only answer I am sure is not right is D. Why? Look into requirements: Receipt processing - Concurrent processing of a receipt must be prevented. Does anyone know which answer is correct?

Concurrent != Parallel

I think that requirement refers to two parallel runs processing the same receipt, not to processing different receipts in parallel.
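
To illustrate that distinction, here is a rough sketch under stated assumptions: `inFlight` and `processOnce` are hypothetical names, not part of the exam scenario, and the guard only works inside a single process. Different receipts still run in parallel, while a second attempt at a receipt that is already in progress is skipped.

```javascript
// Hypothetical in-memory guard: receipt IDs currently being processed.
const inFlight = new Set();

// Runs `work` for a receipt unless that same receipt is already in progress.
async function processOnce(receiptId, work) {
  if (inFlight.has(receiptId)) {
    return "skipped";
  }
  inFlight.add(receiptId);
  try {
    await work(receiptId);
    return "processed";
  } finally {
    // Always release the ID, even if `work` throws.
    inFlight.delete(receiptId);
  }
}
```

Calling `processOnce` through map and Promise.all for the IDs `r1`, `r2`, `r1` processes `r1` and `r2` in parallel and skips the duplicate `r1`. A guard like this would not cover several function instances running at once; that would need coordination outside the process.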

It's C. Based on the fact that "During busy periods, employees report long delays between the time they upload the receipt and when it appears in the web application.", the capacity issue doesn't come from the code; the root cause is the underlying infrastructure of the Azure Function. https://docs.microsoft.com/en-us/azure/azure-functions/functions-scale#hosting-plans-comparison

Read the explanation: "If you want to read the files in parallel, you cannot use forEach." Then read the Stack Overflow answer: it says you cannot use foreach, you need to use for. Hence the correct answer is D: switch your loop from foreach to a for loop.

Cleared AZ-204 today, the question appeared, the option "D" was not there