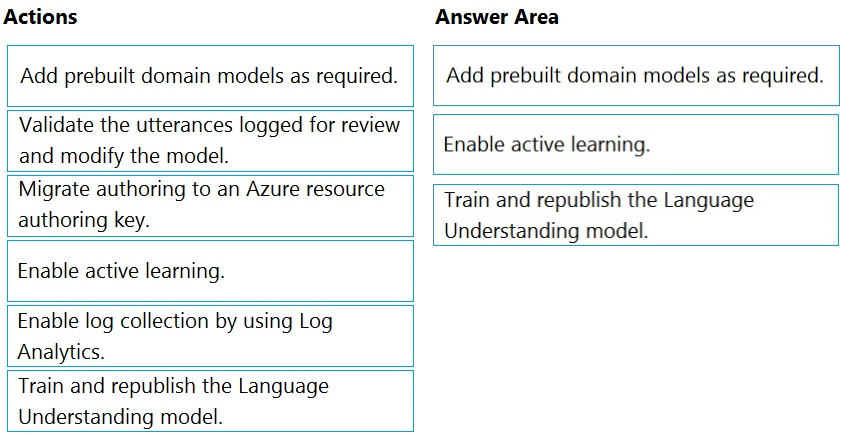

Step 1: Add prebuilt domain models as required.

Prebuilt models provide domains, intents, utterances, and entities. You can start your app with a prebuilt model or add a relevant model to your app later.

Note: Language Understanding (LUIS) provides prebuilt domains, which are pre-trained models of intents and entities that work together for domains or common categories of client applications.

The prebuilt domains are trained and ready to add to your LUIS app. The intents and entities of a prebuilt domain are fully customizable once you've added them to your app.

Step 2: Enable active learning

To enable active learning, you must log user queries. To do this, include the log=true query string parameter when you call the prediction endpoint.
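As a rough illustration of where the log=true parameter goes, the sketch below builds a prediction URL without sending any request. The URL path assumes the V3 prediction endpoint layout, and the function name, endpoint host, app ID, and key are hypothetical placeholders, not values from the question.

```python
from urllib.parse import urlencode


def build_prediction_url(endpoint, app_id, query, key, slot="production", log=True):
    """Build a LUIS prediction URL with query logging switched on or off.

    Assumption: the path follows the V3 prediction endpoint layout
    (/luis/prediction/v3.0/apps/{appId}/slots/{slot}/predict).
    """
    base = f"{endpoint.rstrip('/')}/luis/prediction/v3.0/apps/{app_id}/slots/{slot}/predict"
    params = {
        "query": query,
        "subscription-key": key,
        # log=true is what makes the utterance available for active learning review
        "log": "true" if log else "false",
    }
    return f"{base}?{urlencode(params)}"


# Hypothetical values, for illustration only
url = build_prediction_url(
    "https://example.cognitiveservices.azure.com/",
    "app-id",
    "turn on the lights",
    "KEY",
)
print(url)
```

Queries sent with log=false would not be stored, so they would not show up for review in Step 3.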

Step 3: Train and republish the Language Understanding model

The process of reviewing endpoint utterances for correct predictions is called active learning. Active learning captures endpoint queries and selects the user utterances that LUIS is unsure of. You review these real-world utterances, select the correct intent for each, and mark its entities. Accept these changes into your example utterances, then train and publish the app. LUIS then identifies utterances more accurately.

Incorrect Answers:

Enable log collection by using Log Analytics

Application authors can choose to enable logging of the utterances that are sent to a published application. This is done with the log=true query string parameter on the endpoint query, not through Log Analytics.

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/luis/luis-how-to-review-endpoint-utterances#log-user-queries-to-enable-active-learning

https://docs.microsoft.com/en-us/azure/cognitive-services/luis/luis-concept-prebuilt-model