ACD

The Microsoft documentation lists the steps required to load data from Azure Data Lake Storage Gen2 into Azure SQL Data Warehouse.

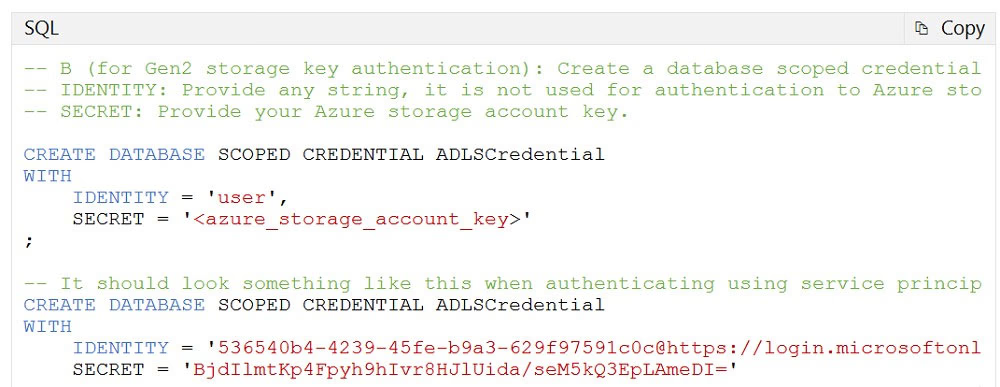

One of the steps is to create a database scoped credential:

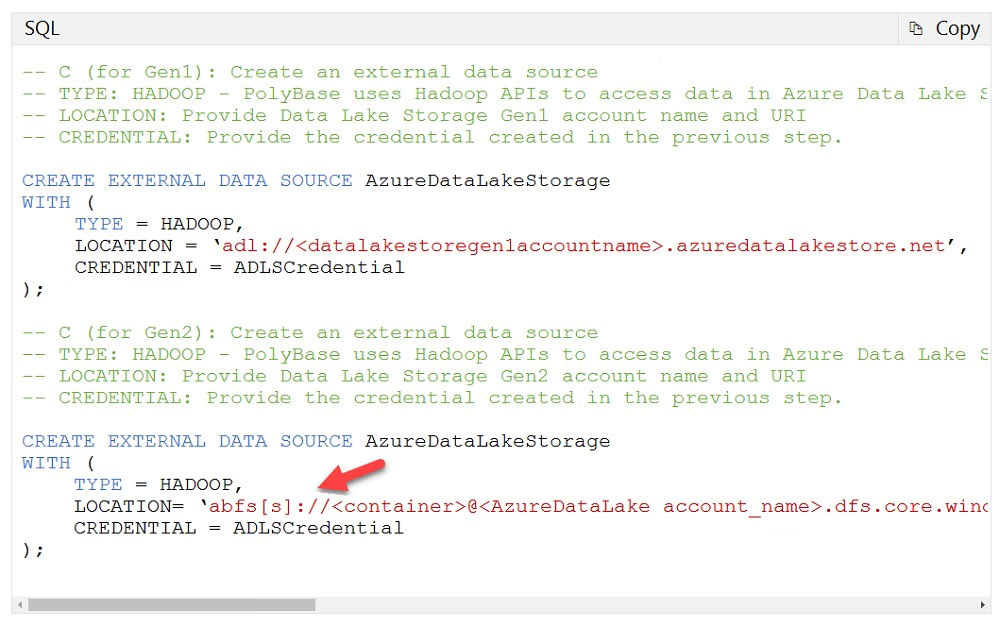
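For readers who cannot see the screenshot, the credential step can be sketched roughly as follows. This is a minimal sketch that assumes storage account key authentication; the credential name ADLSCredential and the placeholder secret are illustrative, not values taken from the question.

```sql
-- A master key must exist in the database before a credential can be created.
CREATE MASTER KEY;

-- ADLSCredential is a hypothetical name chosen for this sketch.
-- With storage account key authentication, IDENTITY can be any string;
-- SECRET holds the storage account key (placeholder shown here).
CREATE DATABASE SCOPED CREDENTIAL ADLSCredential
WITH
    IDENTITY = 'user',
    SECRET = '<azure_storage_account_key>';
```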

Another step is to create the external data source, using the 'abfs' (or 'abfss') scheme in the file location:

Create the external data source -

Use the CREATE EXTERNAL DATA SOURCE command to store the location of the data.
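A minimal sketch of that command is shown here. The data source name AzureDataLakeStorage, the credential name ADLSCredential (assumed to exist already), and the container and account placeholders are all hypothetical.

```sql
-- AzureDataLakeStorage and ADLSCredential are hypothetical names for this sketch.
-- TYPE = HADOOP is what PolyBase uses for Azure Data Lake Storage Gen2.
-- The LOCATION uses the abfss scheme (abfs over TLS); replace the placeholders
-- with the real container and storage account names.
CREATE EXTERNAL DATA SOURCE AzureDataLakeStorage
WITH (
    TYPE = HADOOP,
    LOCATION = 'abfss://<container>@<storage_account>.dfs.core.windows.net',
    CREDENTIAL = ADLSCredential
);
```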

The FIRST_ROW option of CREATE EXTERNAL FILE FORMAT can then be used to skip the first row of each file.

FIRST_ROW = First_row_int -

Specifies the row number that is read first in all files during a PolyBase load. This parameter can take values 1-15. If the value is set to two, the first row in every file (the header row) is skipped when the data is loaded. Rows are skipped based on the existence of row terminators (\r\n, \r, \n). When this option is used for export, rows are added to the data to make sure the file can be read with no data loss. If the value is set to >2, the first row exported is the column names of the external table.
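As an illustration, a delimited-text file format that skips a single header row might look like the sketch below. The format name TextFileFormat and the comma and double-quote delimiters are assumptions made for the example, not details given in the question.

```sql
-- TextFileFormat is a hypothetical name; the delimiters are assumed for a typical CSV.
-- FIRST_ROW = 2 makes PolyBase start reading at row 2, so the header row is skipped.
CREATE EXTERNAL FILE FORMAT TextFileFormat
WITH (
    FORMAT_TYPE = DELIMITEDTEXT,
    FORMAT_OPTIONS (
        FIELD_TERMINATOR = ',',
        STRING_DELIMITER = '"',
        FIRST_ROW = 2
    )
);
```

An external table that references this file format would then load every file in the source location starting from its second row.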

Reference:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-load-from-azure-data-lake-store

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-external-file-format-transact-sql?view=sql-server-ver15