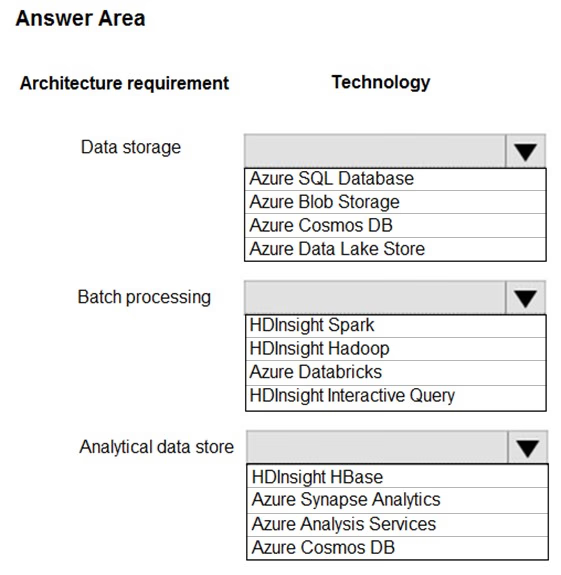

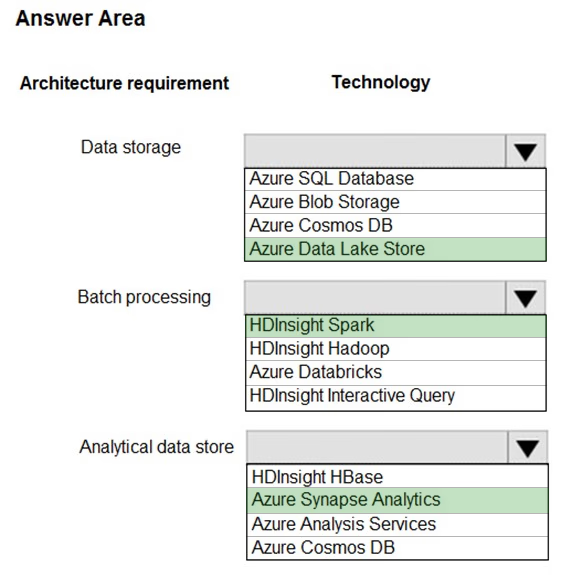

HOTSPOT -

You are developing a solution using a Lambda architecture on Microsoft Azure.

The data at rest layer must meet the following requirements:

Data storage:

✑ Serve as a repository for high volumes of large files in various formats.

✑ Implement optimized storage for big data analytics workloads.

✑ Ensure that data can be organized using a hierarchical structure.

Batch processing:

✑ Use a managed solution for in-memory computation processing.

✑ Natively support Scala, Python, and R programming languages.

✑ Provide the ability to resize and terminate the cluster automatically.

Analytical data store:

✑ Support parallel processing.

✑ Use columnar storage.

✑ Support SQL-based languages.

You need to identify the correct technologies to build the Lambda architecture.

Which technologies should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area: