How should a web application be designed to work on a platform where up to 1000 requests per second can be served?

To keep a web application responsive on a platform that can serve up to 1000 requests per second, set a per-user request limit (for example, 5 requests per minute per user) and reject requests from users who have reached it. A per-user limit stops any single user from consuming a disproportionate share of the platform's capacity, so resources are shared fairly and the application stays responsive and stable for everyone else. The limit should be sized against the expected user population: at 5 requests per minute, roughly 12,000 users sending at their maximum rate would together reach 1000 requests per second.
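
A per-user limit of this kind can be sketched as a small sliding-window limiter. This is a minimal, single-process illustration, not a production design: the class name `PerUserRateLimiter`, its parameters, and the in-memory storage are assumptions made for the example, and a real deployment would usually keep the counters in a shared store so that every application instance enforces the same limit.

```python
import time
from collections import defaultdict, deque


class PerUserRateLimiter:
    """Allow at most `limit` requests per `window` seconds for each user."""

    def __init__(self, limit=5, window=60.0, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._hits = defaultdict(deque)  # user_id -> timestamps of accepted requests

    def allow(self, user_id):
        now = self.clock()
        hits = self._hits[user_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # the caller would typically respond with HTTP 429
        hits.append(now)
        return True
```

A request handler would call `allow(user_id)` before doing any work and reject the request when it returns `False`. Each user has an independent window, so one user hitting the limit does not affect anyone else.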

An organization manages a large cloud-deployed application that employs a microservices architecture across multiple data centers. Reports have been received about application slowness. The container orchestration logs show that faults have been raised in a variety of containers that caused them to fail and then spin up brand new instances.

Which two actions can improve the design of the application to identify the faults? (Choose two.)

Two design changes make the faults identifiable. First, implement a tagging methodology that follows application execution from service to service: each request carries an identifier that every microservice records, so a transaction can be traced across services and the point of failure located. Second, add logging on exception with immediate notification, so that error details are captured when the fault occurs and the team is alerted in real time. Together these improve observability and shorten diagnosis and resolution.
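
The two actions can be sketched together in one request wrapper. This is an illustrative sketch only: the header name `X-Correlation-ID`, the service name `orders`, and the functions `handle_request` and `notify_on_call` are assumptions for the example, and the notification hook is a placeholder for whatever alerting channel the team actually uses.

```python
import logging
import uuid

logger = logging.getLogger("orders")  # hypothetical service name


def notify_on_call(message):
    """Placeholder alerting hook (pager, chat webhook); assumed for this sketch."""
    logger.critical("ALERT: %s", message)


def handle_request(headers, work, notify=notify_on_call):
    # Reuse the caller's ID if present, otherwise start a new trace at this service.
    trace_id = headers.get("X-Correlation-ID") or str(uuid.uuid4())
    try:
        result = work()
    except Exception as exc:
        # Log the stack trace tagged with the trace ID, then alert immediately.
        logger.exception("trace_id=%s request failed", trace_id)
        notify(f"trace_id={trace_id} {type(exc).__name__}: {exc}")
        raise
    # Return the same ID so it can be forwarded to the next service.
    return result, {"X-Correlation-ID": trace_id}
```

Because every service logs under the same identifier, searching the aggregated logs for one `trace_id` shows the path of a single transaction and the service in which it failed.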

Which two situations are flagged by software tools designed for dependency checking in continuous integration environments, such as OWASP? (Choose two.)

Dependency-checking tools used in continuous integration flag two situations: publicly disclosed vulnerabilities in the included dependencies, and incompatible licenses in the included dependencies. Flagging known vulnerabilities keeps components with published security issues out of the build. Flagging incompatible licenses helps developers meet licensing requirements and avoid legal problems. OWASP Dependency-Check itself concentrates on the first check, matching dependencies against publicly disclosed vulnerabilities (CVEs); license checks are typically handled by other software composition analysis tools.
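
The two kinds of finding can be illustrated with a toy CI gate. This sketch does not reflect how OWASP Dependency-Check or any real scanner is implemented: every package name, advisory ID, and license string below is invented for the example, and a real tool would consult a maintained vulnerability database rather than a hard-coded dictionary.

```python
# All package names, advisory IDs, and licenses here are invented for illustration.
ADVISORIES = {("examplelib", "1.2.0"): ["EXAMPLE-ADVISORY-0001"]}
DENIED_LICENSES = {"Example-Restrictive-1.0"}


def check_dependencies(dependencies):
    """Return (package, problem) findings that a CI job could fail the build on."""
    findings = []
    for dep in dependencies:
        # Finding type 1: a publicly disclosed vulnerability for this exact version.
        for advisory in ADVISORIES.get((dep["name"], dep["version"]), []):
            findings.append((dep["name"], f"known vulnerability {advisory}"))
        # Finding type 2: a license the project has decided it cannot accept.
        if dep["license"] in DENIED_LICENSES:
            findings.append((dep["name"], f"incompatible license {dep['license']}"))
    return findings
```

A pipeline step would run a check like this against the resolved dependency list and stop the build when the returned list is not empty.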

A network operations team is using the cloud to automate some of their managed customer and branch locations. They require that all of their tooling be ephemeral by design and that the entire automation environment can be recreated without manual commands. Automation code and configuration state will be stored in git for change control and versioning. The engineering high-level plan is to use VMs in a cloud-provider environment, then configure open source tooling onto these VMs to poll, test, and configure the remote devices, as well as deploy the tooling itself.

Which configuration management and/or automation tooling is needed for this solution?

To meet the team's requirement for an ephemeral environment that can be recreated without manual commands, a combination of Terraform and Ansible is needed. Terraform provisions and manages the VM infrastructure in the cloud-provider environment as infrastructure as code (IaC). Ansible handles configuration management: it deploys the tooling onto those VMs and automates polling, testing, and configuring the remote devices. Both tools are driven by text files that can be stored in git for change control and versioning, and both are open source, which matches the requirement for open source tooling.

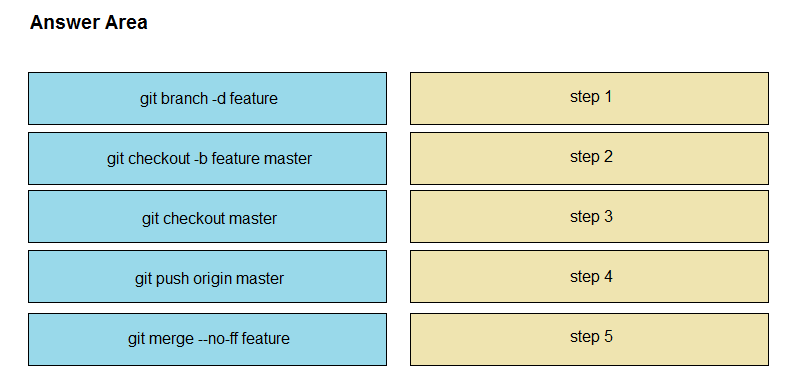

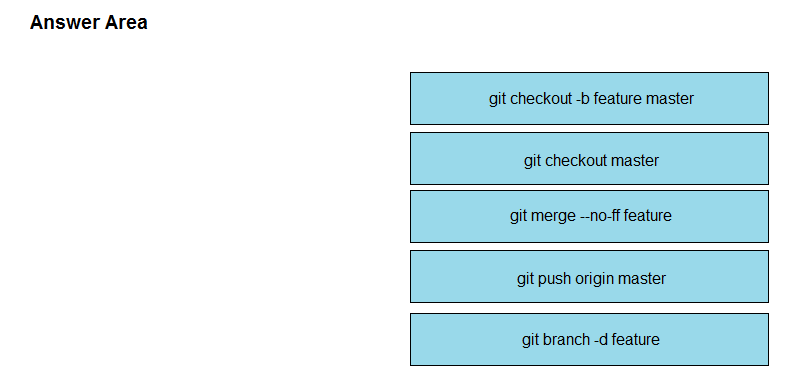


Drag and drop the git commands from the left into the correct order on the right to create a feature branch from the master and then incorporate that feature branch into the master.
